Latest 10 recent news (see index)

June 14, 2025

XBPS 0.60

What’s Changed

- libxbps: fix issues with updating packages in unpacked state. duncaen

- libxbps: run all scripts before and after unpacking all packages, to avoid running things in a half unpacked state. duncaen

- libxbps: fix configuration parsing with missing trailing newline and remove trailing spaces from values. eater, duncaen

- libxbps: fix XBPS_ARCH environment variable if architecture is also defined in a configuration file. duncaen

- libxbps: fix memory leaks. ArsenArsen

- libxbps: fix file descriptor leaks. gt7-void

- libxbps: fix temporary redirect in libfetch. ericonr

- libxbps: fix how the automatic/manual mode is set when replacing a package using replaces. This makes it possible to correctly replace manually installed packages using a transitional package. duncaen

- libxbps: fix inconsistent dependency resolution when a dependency is on hold. xbps will now exit with ENODEV (19) if a held dependency breaks the installation or update of a package, instead of just ignoring it, which resulted in an inconsistent pkgdb. #393 duncaen

- libxbps: fix issues with XBPS_FLAG_INSTALL_AUTO where already installed packages would get marked automatically installed when they are being updated while installing new packages in automatically installed mode. #557 duncaen

- libxbps: when reinstalling a package, don’t remove directories that are still part of the new package. This avoids the recreation of directories which trips up runsv, as it keeps an fd to the service directory open that would be deleted and recreated. #561 duncaen

- xbps-install(1): list reinstalled packages. chocimier

- xbps-install(1): in dry-run mode, ignore out of space error. chocimier

- xbps-install(1): fix bug where a repo-locked dependency could be updated from a repository it was not locked to. chocimier

- xbps-fetch(1): make sure to exit with failure if a failure was encountered. duncaen

- xbps-fetch(1): fix printing uninitialized memory in error cases. duncaen

- xbps-pkgdb(1): remove mtime checks, they are unreliable on FAT filesystems and xbps does not rely on mtime matching the package anymore. duncaen

- xbps-checkvers(1): with --installed also list subpackages. chocimier

- xbps-remove(1): fix dry-run cache cleaning inconsistencies. duncaen

- xbps-remove(1): allow removing “uninstalled” packages (packages in the cache that are still up to date but no longer installed) from the package cache by specifying the -O/--clean-cache flag twice. #530 duncaen

- xbps-query(1): --cat now works in either repo or pkgdb mode. duncaen

- xbps-query(1): --list-repos/-L list all repos including ones that fail to open. chocimier

- xbps.d(5): describe ignorepkg more precisely. chocimier

- libxbps, xbps-install(1), xbps-remove(1), xbps-reconfigure(1), xbps-alternatives(1): add XBPS_SYSLOG environment variable to override the syslog configuration option. duncaen

- libxbps: Resolve performance issue caused by the growing number of virtual packages in the Void Linux repository. #625 duncaen

- libxbps: Merge the staging data into the repository index (repodata) file. This allows downloading the staging index from remote repositories without having to keep the two index files in sync. #575 duncaen

- xbps-install(1), xbps-query(1), xbps-checkvers(1), xbps.d(5): Added --staging flag, XBPS_STAGING environment variable and staging=true|false configuration option. Enabling staging allows xbps to use staged packages from remote repositories. duncaen

- xbps-install(1), xbps-remove(1): Print package install and removal messages once, below the transaction summary, before applying the transaction. #572 chocimier

- xbps-query(1): Improved argument parsing allows package arguments anywhere in the argument list. #588 classabbyamp

- xbps-install(1): Make dry-run output consistent/machine parsable. #611 classabbyamp

- libxbps: Do not url-escape tilde character in path for better compatibility with some servers. #607 gmbeard

- libxbps: use the proper ASN1 signature type for packages. Signatures now have a .sig2 extension. #565 classabbyamp

- xbps-uhelper(1): add verbose output for pkgmatch and cmpver subcommands if the -v/--verbose flag is specified. #549 classabbyamp

- xbps-uhelper(1): support multiple arguments for many subcommands to improve pipelined performance. #536 classabbyamp

- xbps-alternatives(1): Add -R/--repository mode to -l/--list to show alternatives of packages in the repository. #340 duncaen

- libxbps: fix permanent (308) redirects when fetching packages and repositories. duncaen

- xbps-remove(1): ignore file-not-found errors for files it deletes. duncaen

- libxbps: the preserve package metadata is now also respected for package removals. duncaen

- xbps-pkgdb(1): new --checks option allows choosing which checks are run. #352 ericonr, duncaen
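Scripts that run unattended updates may want to tell the new held-dependency exit status apart from other failures. The following is a minimal sketch, assuming the status is 19 as the changelog states; the wrapper name update_or_report_hold is hypothetical and not part of xbps.

```shell
#!/bin/sh
# Hypothetical wrapper (not part of xbps): run the given command and print a
# hint when it exits with 19 (ENODEV), the status the changelog says xbps 0.60
# uses when a held dependency blocks an install or update.
update_or_report_hold() {
    rc=0
    "$@" || rc=$?
    if [ "$rc" -eq 19 ]; then
        echo "blocked by a held dependency; list holds with: xbps-query -H" >&2
    fi
    # Pass the original exit status through unchanged.
    return "$rc"
}
```

It could be called as update_or_report_hold xbps-install -Su; any other exit status is returned as-is without extra output.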

Full Changelog: https://github.com/void-linux/xbps/compare/0.59.2...0.60

April 01, 2025

Introducing Void Linux: Enterprise Edition

In today’s fast-paced digital landscape, businesses must continuously innovate to remain competitive and drive growth. That is why we are thrilled to unveil our latest solution, Void Linux: Enterprise Edition. Leveraging cutting-edge technology, this next-generation operating system offers unparalleled value, superior return on investment (ROI), and exceptional operational excellence.

Transform Your Infrastructure with Next-Generation Technology

Void Enterprise sets itself apart from traditional enterprise solutions by delivering a more secure, stable, and high-performance experience for your business-critical applications. Our solution is built upon the proven foundation of Void Linux, renowned for its reliability and robustness in data centers and cloud environments.

Our team of experts has meticulously designed each component to work harmoniously together, resulting in seamless integration and efficient resource utilization. This streamlined infrastructure not only minimizes operational costs but also maximizes your IT resources’ potential.

Enhance Operational Excellence with Automation and Simplicity

At the heart of Void Enterprise lies its commitment to simplifying complex processes. By automating repetitive tasks and providing intuitive management tools, our solution empowers your IT team to focus on more strategic initiatives that drive business growth.

We believe in giving back control to administrators, which is why we have included a comprehensive suite of automation features designed specifically for enterprise environments. With Void Enterprise, you can effortlessly manage infrastructure provisioning, configuration, and updates without the need for extensive scripting knowledge or manual intervention.

Improve ROI with Cloud-Optimized Infrastructure

As businesses increasingly move toward hybrid and multi-cloud strategies, Void Enterprise ensures seamless integration with popular cloud platforms. This enables organizations to maximize their investment in existing infrastructure while easily extending resources into the cloud to support evolving business demands.

Our solution comes equipped with advanced containerization capabilities, allowing you to quickly scale applications and workloads without over-provisioning or wasting resources. This results in improved ROI as your IT team can efficiently allocate resources and achieve desired outcomes at a lower total cost of ownership.

Seamlessly Integrate with Your Existing Infrastructure

We understand that migrating to new technology can be challenging. That’s why Void Enterprise Edition is designed for easy integration with your existing infrastructure. Our solution provides robust compatibility with an extensive range of applications, ensuring minimal disruption during the transition process.

Our dedicated team is committed to providing top-notch support and assistance throughout every stage of your journey toward operational excellence. From initial deployment to ongoing maintenance, we’ve got you covered.

Void Linux: Enterprise Edition represents a quantum leap forward in enterprise technology solutions. It delivers value, improves ROI, and enhances operational excellence by combining the power of next-generation technology with unmatched ease of use and seamless integration capabilities.

Get ready to elevate your business operations to new heights with Void Linux: Enterprise Edition. Experience the future of IT infrastructure today!

You can find Void Linux Enterprise images for x86_64 and x86_64-musl on our downloads page and on our many mirrors.

Contact your Void Enterprise distributor or systems integrator to purchase a license key today!

You may verify the authenticity of the images by following the instructions in the handbook, and using the following minisign key information:

untrusted comment: minisign public key 4D951FCB5722B6A4

RWSktiJXyx+VTT+tvaAOgJY5iLlt1tiQw6q3giH1+Fs2J7RnYaAewRHw

February 02, 2025

February 2025 Image Release: Arm64 Extravaganza

We’re pleased to announce that the 20250202 image set has been promoted to current and is now generally available.

You can find the new images on our downloads page and on our many mirrors.

This release introduces support for several arm64 UEFI devices:

Live ISOs for aarch64 and aarch64-musl should also support other arm64 devices that support UEFI and can run a mainline (standard) kernel.

Additionally, this image release includes:

- Linux 6.12 in live ISOs

- Xfce 4.20 in xfce-flavored live ISOs

- Linux 6.6.69 in Raspberry Pi PLATFORMFSes and images

- xgenfstab, a new script from xtools to simplify generation of /etc/fstab for chroot installs

and the following changes:

- Fixed issue where systems with Nvidia graphics cards would not boot without nomodeset (void-packages #52545)

- Added a bootloader menu entry to disable graphics by setting nomodeset (void-mklive 380f0fd)

- Added additional hotkeys in the bootloader menu. See the handbook for a full listing (void-mklive 380f0fd)

- Raspberry Pi platform images are now smaller by default, but will grow the root partition to fit the storage device upon first boot using growpart. See the handbook for more details (void-mklive #379)

- void-installer now includes a post-installation menu to enable services on the installed system (void-mklive #389)

- rpi-aarch64 and rpi-aarch64-musl PLATFORMFSes and platform images should now support the recently-released Raspberry Pi 500 and CM5.

You may verify the authenticity of the images by following the instructions in the handbook, and using the following minisign key information:

untrusted comment: minisign public key 4D56E70F102AF9F9

RWT5+SoQD+dWTeOdNuc4Q/jq2+3+jpql7+JJp4WukkxTdpsZlk2EGuPj
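As a rough sketch of what the verification can look like with the key above (the handbook's instructions remain authoritative): the file names sha256sum.txt and sha256sum.sig, the function name verify_images, and the use of GNU sha256sum's --ignore-missing option are assumptions made for this example.

```shell
#!/bin/sh
# Sketch only; follow the handbook if it differs.
# Assumed layout: sha256sum.txt (checksums) and sha256sum.sig (its minisign
# signature) sit in the same directory as the downloaded images.
VOID_PUBKEY='RWT5+SoQD+dWTeOdNuc4Q/jq2+3+jpql7+JJp4WukkxTdpsZlk2EGuPj'

verify_images() {
    dir="${1:-.}"
    (
        cd "$dir" || exit 1
        # 1. Check that the checksum file was signed with the release key.
        minisign -V -P "$VOID_PUBKEY" -x sha256sum.sig -m sha256sum.txt || exit 1
        # 2. Check the downloaded images against the signed checksums,
        #    skipping listed files that were not downloaded.
        sha256sum -c --ignore-missing sha256sum.txt
    )
}
```

Checking the signature first matters: the checksums are only trustworthy once the file that lists them has been verified against the published key.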

September 29, 2024

Goodbye, Python 2! Hello, New Buildbot!

At long last, Void is saying goodbye to Python 2. Python ended support for Python 2 in 2020, but Void still had over 200 packages that depended on it. Since then, Void contributors have updated, patched, or removed these packages. For the moment, Python 2 will remain in the repositories as python2 (along with python2-setuptools and python2-pip). python is now a metapackage that will soon point to python3.

One of the biggest blockers for this project was some of Void’s own infrastructure: our buildbot, which builds all packages for delivery to users. For a long time, we were stuck on buildbot 0.8.12 (released on 21 April 2015 and using Python 2), because it was complex to get working, had many moving parts, and was fairly fragile. To update it to a modern version would require significant time and effort.

Now, we move into the future: we’ve upgraded our buildbot to version 4.0, and it is now being managed via our orchestration system, Nomad, to improve reliability, observability, and reproducibility in deployment. Check out the 2023 Infrastructure Week series of blog posts for more info about how and why Void uses Nomad.

Visit the new buildbot dashboard at build.voidlinux.org and watch your packages build!

July 12, 2024

Welcome a New Contributor!

The Void project is pleased to welcome aboard another new member, @tranzystorekk.

Interested in seeing your name in a future update here? Read our Contributing Page and find a place to help out! New members are invited from the community of contributors.

March 14, 2024

March 2024 Image Release (and Raspberry Pi 5 support)

We’re pleased to announce that the 20240314 image set has been promoted to current and is now generally available.

You can find the new images on our downloads page and on our many mirrors.

Some highlights of this release:

- A keymap selector is now shown in LightDM on XFCE images (@classabbyamp in #354)

- The chrony NTP daemon is now enabled by default in live images (@classabbyamp in abbd636)

- Raspberry Pi images can now be installed on non-SD card storage without manual configuration on models that support booting from USB or NVMe (@classabbyamp in #361)

- Raspberry Pi images now default to a /boot partition of 256MiB instead of 64MiB (@classabbyamp in #368)

rpi-aarch64* PLATFORMFSes and images now support the Raspberry Pi 5. After installation, the kernel can be switched to the Raspberry Pi 5-specific rpi5-kernel.

You may verify the authenticity of the images by following the instructions on the downloads page, and using the following minisign key information:

untrusted comment: minisign public key A3FCFCCA9D356F86

RWSGbzWdyvz8o4nrhY1nbmHLF6QiFH/AQXs1mS/0X+t1x3WwUA16hdc/

January 22, 2024

Changes to xbps-src Masterdir Creation and Use

In an effort to simplify the usage of xbps-src, there has been a small change to how masterdirs (the containers xbps-src uses to build packages) are created and used.

The default masterdir is now called masterdir-<arch>, except when masterdir already exists or when using xbps-src in a container (where it’s still masterdir).

Creation

When creating a masterdir for an alternate architecture or libc, the previous syntax was:

./xbps-src -m <name> binary-bootstrap <arch>

Now, the <arch> should be specified using the new -A (host architecture) flag:

./xbps-src -A <arch> binary-bootstrap

This will create a new masterdir called masterdir-<arch> in the root of your void-packages repository checkout.

Arbitrarily-named masterdirs can still be created with -m <name>.

Usage

Instead of specifying the alternative masterdir directly, you can now use the -A (host architecture) flag to use the masterdir-<arch> masterdir:

./xbps-src -A <arch> pkg <pkgname>

Arbitrarily-named masterdirs can still be used with -m <name>.
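Put together, creation and use might look like the sketch below. The helper name build_for_arch and the XBPS_SRC variable are hypothetical, and skipping the bootstrap when masterdir-<arch> already exists is a choice made for this example, not something the announcement prescribes. It assumes the current directory is the root of a void-packages checkout.

```shell
#!/bin/sh
# Hypothetical helper: bootstrap masterdir-<arch> on first use, then build a
# package with it. Run from the root of a void-packages checkout.
XBPS_SRC="${XBPS_SRC:-./xbps-src}"

build_for_arch() {
    arch="$1"   # e.g. x86_64-musl
    pkg="$2"    # package name to build
    # The new default masterdir for -A <arch> is masterdir-<arch>.
    if [ ! -d "masterdir-$arch" ]; then
        "$XBPS_SRC" -A "$arch" binary-bootstrap || return 1
    fi
    "$XBPS_SRC" -A "$arch" pkg "$pkg"
}
```

For instance, build_for_arch x86_64-musl xtools would bootstrap masterdir-x86_64-musl if needed and then build xtools in it.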

January 22, 2024

Welcome New Contributors!

The Void project is pleased to welcome aboard 2 new members.

Joining us to work on packages are @oreo639 and @cinerea0.

Interested in seeing your name in a future update here? Read our Contributing Page and find a place to help out! New members are invited from the community of contributors.

January 04, 2024

glibc 2.38 Update Issues and Solutions

With the update to glibc 2.38, libcrypt.so.1 is no longer provided by glibc.

Libcrypt is an important library for several core system packages that use cryptographic functions, including pam. The library has changed versions, and the legacy version is still available for precompiled or proprietary applications. The new version is available on Void as libxcrypt and the legacy version is libxcrypt-compat.

With this change, some kinds of partial upgrades can leave PAM unable to function. This breaks tools like sudo, doas, and su, as well as breaking authentication to your system. Symptoms include messages like “PAM authentication error: Module is unknown”. If this has happened to you, you can either:

- add init=/bin/sh to your kernel command-line in the bootloader and downgrade glibc,

- or mount the system’s root partition in a live environment, chroot into it, and install libxcrypt-compat

Either of these steps should allow you to access your system as normal and run a full update.

To ensure the disastrous partial upgrade (described above) cannot happen, glibc-2.38_3 now depends on libxcrypt-compat. With this change, it is safe to perform partial upgrades that include glibc 2.38.
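For the live-environment route described above, the steps could be sketched roughly as follows. The function name repair_libcrypt, the device name, and the bind mounts are assumptions for this example; it also assumes a single unencrypted root partition and working network access inside the chroot. Adapt it to your own partition layout before running anything as root.

```shell
#!/bin/sh
# Sketch of the live-environment recovery; run as root from a live image.
# Assumptions: one unencrypted root partition, network reachable from the chroot.
repair_libcrypt() {
    root_dev="$1"        # root partition of the broken system (placeholder, e.g. /dev/sdX2)
    mnt="${2:-/mnt}"     # where to mount it
    mount "$root_dev" "$mnt" || return 1
    # Expose the live system's kernel filesystems inside the chroot.
    for fs in dev proc sys; do
        mount --rbind "/$fs" "$mnt/$fs" || return 1
    done
    # Install the legacy libcrypt inside the installed system.
    chroot "$mnt" xbps-install -Sy libxcrypt-compat
}
```

After this succeeds, rebooting into the installed system and running a full update should bring everything back in step.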

October 24, 2023

Changes to Repository Sync

Void is a complex system, and over time we make changes to reduce this complexity, or shift it to easier-to-manage components. Recently, through the fantastic work of one of our maintainers, classabbyamp, our repository sync system has been dramatically improved.

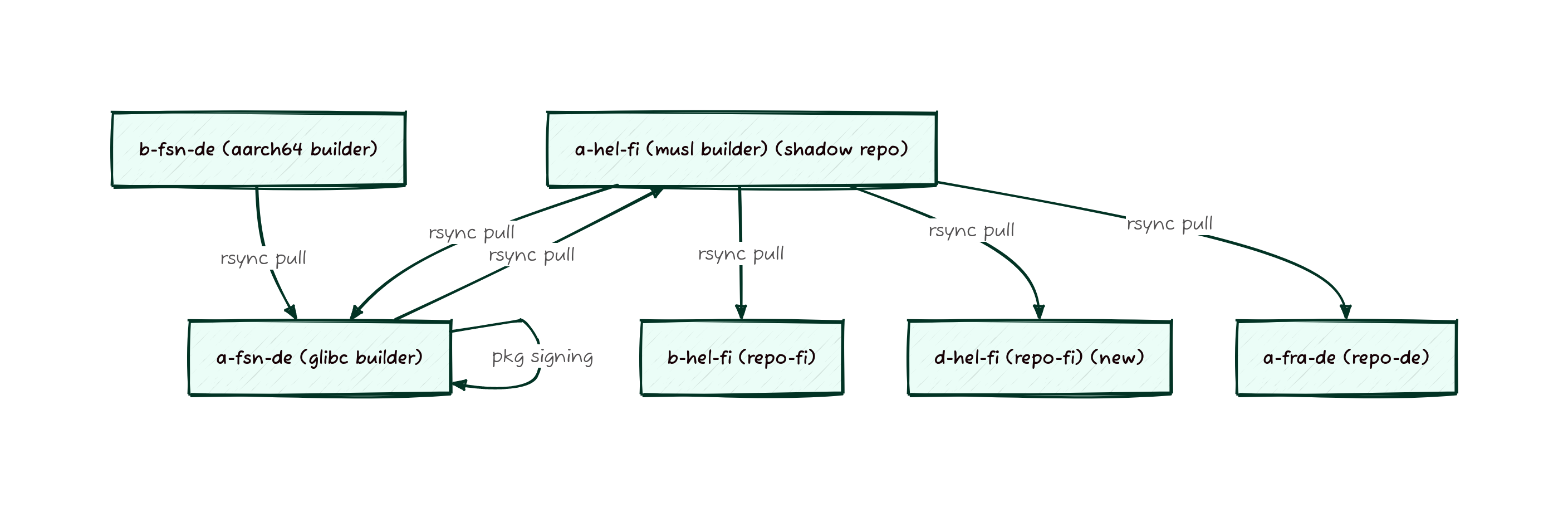

Previously, our system was based on a series of host-managed rsyncs running on either snooze or cron-based timers. These syncs would push files to a central location to be signed and then distributed. This central location is sometimes referred to as the “shadow repo” since it’s not directly available to end users to synchronize from, and we don’t usually allow anyone outside Void to have access to it.

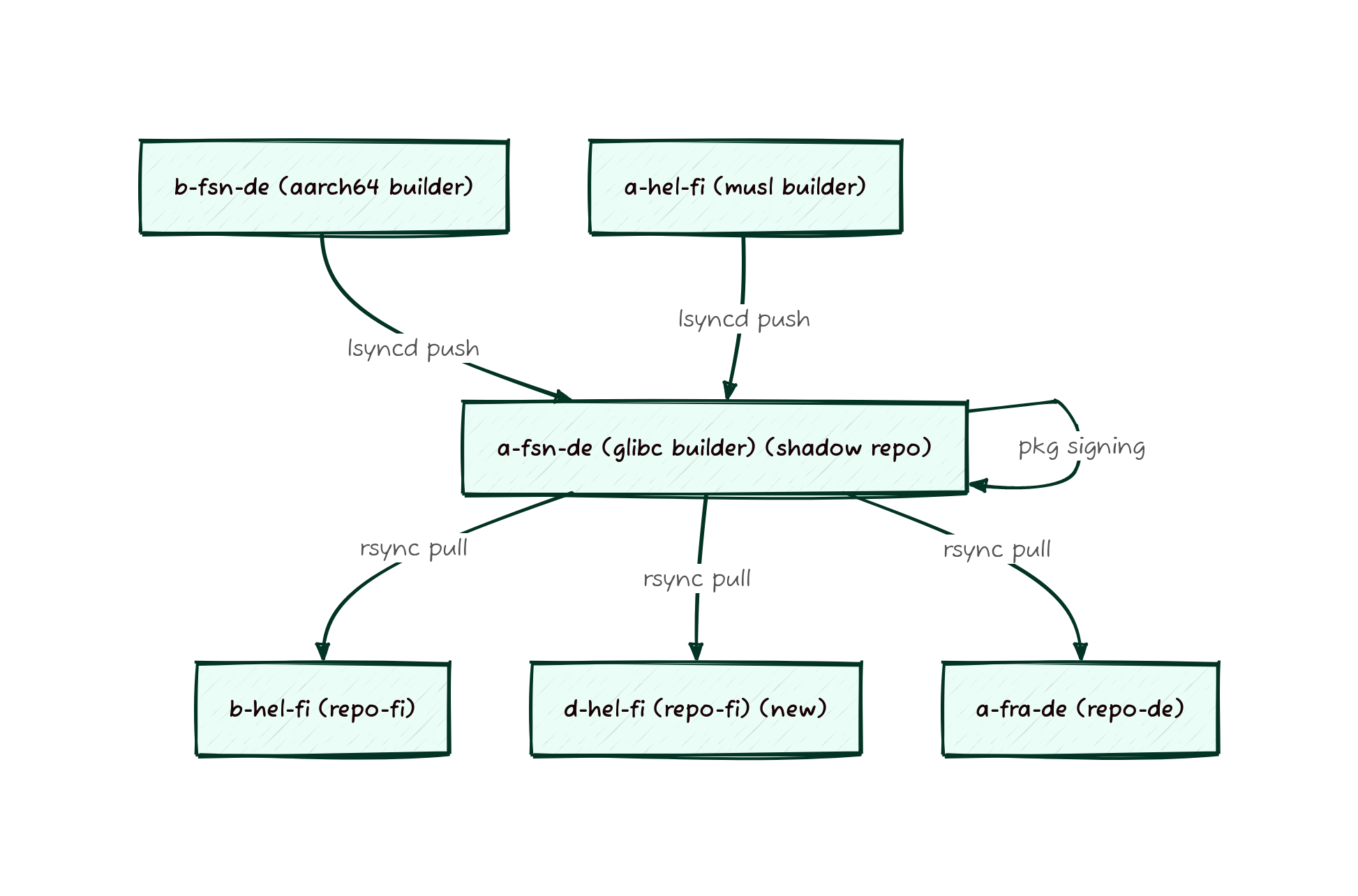

As you might have noticed from the Fastly Overview, the packages take a long path from builders to repos. What is not obvious from the graph shown is that the shadow repo previously lived on the musl builder, meaning that packages would get built there, copied to the glibc builder, then copied back to the musl builder and finally copied to a mirror. So many copies! To streamline this process, the shadow repo is now just the glibc server, since that’s where the packages have to wind up for architectural reasons anyway. This means we were able to cut out two rsyncs and reclaim a large amount of space on the musl builder, making the entire process less fragile and more streamlined.

But just removing rsyncs isn’t all that was done. To improve the time it takes for packages to make it to users, we’ve also switched the builders from using a time-based sync to using lsyncd to take more active management of the synchronization process. In addition to moving to a more sustainable sync process, the entire process was moved up into our Nomad-managed environment. Nomad allows us to more easily update services, monitor them for long-term trends, and to make it clearer where services are deployed.

In addition to forklifting the sync processes, we also forklifted void-updates, xlocate, xq-api (package search), and the generation of the docs-site into Nomad. These changes represent some of the very last services that were not part of our modernized container-orchestrated infrastructure.

Visually, this is what the difference looks like. Here’s before:

And here’s what the sync looks like now; note that there are no longer any cycles in the syncs:

If you run a downstream mirror, we need your help! If your mirror has existed for long enough, it’s possible that you were still synchronizing from alpha.de.repo.voidlinux.org, which has been a dead servername for several years now. Since moving around sync traffic is key to our ability to keep the lights on, we’ve provisioned a new dedicated DNS record for mirrors to talk to. The new repo-sync.voidlinux.org is the preferred origin point for all sync traffic, and using it means that we can transparently move the sync origin during maintenance rather than causing an rsync hang on your sync job. Please check where you’re mirroring from and update accordingly.
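One way a mirror operator might check and update existing sync scripts is sketched below. The function name update_sync_origin is hypothetical, and which files hold your sync configuration (cron entries, service scripts, and so on) depends on your setup; only the two hostnames come from this post.

```shell
#!/bin/sh
# Hypothetical helper: replace the dead sync origin with the new one in the
# given files, keeping a .bak copy of each file that is changed.
update_sync_origin() {
    for f in "$@"; do
        if grep -q 'alpha\.de\.repo\.voidlinux\.org' "$f"; then
            sed -i.bak 's/alpha\.de\.repo\.voidlinux\.org/repo-sync.voidlinux.org/g' "$f"
            echo "updated: $f"
        fi
    done
}
```

Files that do not mention the old hostname are left untouched, so the function can be pointed at every script that might be involved in the sync job.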